Some time ago I started writing on a blog post about Pentaho Data Services, but then never came around to finish it. A few weeks ago Edwin Weber (of PDI Data Vault fame) gave a presentation on this particular topic at the Pentaho Netherlands Meetup. I kindly asked him to send me the slides and discovered that he had made quite some interesting findings, some of which I will describe below.

Setting up the Dev Environment

We will quickly spin up some Docker Containers for MySQL and MongoDB as well as install PDI and Teiid locally.

Docker Containers

MySQL

Let’s get a Docker Percona container running first. This assumes you have Docker installed on your machine:

$ docker pull percona:5.7

$ docker run \

--name myPerconaContainer \

-p 3306:3306 \

-e MYSQL_ROOT_PASSWORD=test \

-e MYSQL_DATABASE=test \

-e MYSQL_USER=test \

-e MYSQL_PASSWORD=test \

-d percona:5.7

Note: Make sure to provide a port mapping when starting the container, so external clients (so e.g. clients on your host system) like PDI can connect to it.

To check if the port is correctly exposed, run this:

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d3425237b274 percona:5.7 "/docker-entrypoint.s" About a minute ago Up About a minute 0.0.0.0:3306->3306/tcp myPerconaContainer

Alternatively, if you have Nmap installed run this:

$ docker-machine ps

192.168.99.100

$ sudo nmap -sT -O 192.168.99.100

Password:

Starting Nmap 7.01 ( https://nmap.org ) at 2016-05-03 19:32 BST

Nmap scan report for 192.168.99.100

Host is up (0.00048s latency).

Not shown: 998 closed ports

PORT STATE SERVICE

22/tcp open ssh

3306/tcp open mysql

MAC Address: 08:00:27:42:BB:F7 (Oracle VirtualBox virtual NIC)

Device type: general purpose

Running: Linux 3.X|4.X

OS CPE: cpe:/o:linux:linux_kernel:3 cpe:/o:linux:linux_kernel:4

OS details: Linux 3.2 - 4.0

Network Distance: 1 hop

OS detection performed. Please report any incorrect results at https://nmap.org/submit/ .

Nmap done: 1 IP address (1 host up) scanned in 1.99 seconds

As you can see, the port is correctly exposed (note that we asked docker machine for the IP - this is only necessary on Mac OS X and Windows, on Linux you should be able to just use localhost).

Next let’s check that MySQL DB is actually working (this creates another container which links to our previous one, you’ll land in the mysql command line client):

docker run -it --link some-percona:mysql --rm percona sh -c 'exec mysql -h"$MYSQL_PORT_3306_TCP_ADDR" -P"$MYSQL_PORT_3306_TCP_PORT" -uroot -p"$MYSQL_ENV_MYSQL_ROOT_PASSWORD"'

Also, use a local tool to cross check the connection:

$ mysql -utest -h192.168.99.100 -P3306

MongoDB

Now we also want to spin up a MongoDB Docker container:

$ docker pull mongo:3.3

$ docker run \

--name myMongoContainer \

-p 27017:27017 \

-d mongo:3.3

Use the mongodb command line tool or an app like Robomongo to check if the connection is working.

With the data store services ready, we can now shift our focus to PDI and TEEID, which we will install locally.

Note: If at any point you want to stop the containers, just run

docker stop <containerName>. And if you want to start them later on again:docker start <containerName>.

PDI

Download the latest version from Sourceforge and extract it in a convenient location. Then download the MySQL JDBC driver and add it to the PDI lib folder.

TEIID

You’ll find instructions on how to install TEIID in one of the next sections of this article.

Making Data available as a Service

For most of the example I will reuse the transfromations shown at the Meetup by Edwin Weber - a big thanks to him for sharing them!

Ok, let’s get started:

- Download the example files from here.

- Extract them in a convenient directory.

- Inside the extracted directory you’ll find a

my-versiondirectory. On the command line, move into this directory. - Next run

vi config/dev/.kettle/kettle.propertiesand adjust the database settings to your local setup. - Next adjust the

KETTLE_HOMEpath:vi shell-scripts/set-project-variables.sh. Then execute the same file. - Within the same Terminal window, navigate to your PDI install folder. Start Spoon.

- In Spoon connect to the file repository [[OPEN]] open all the jobs and transformations located in our project folder in the

difolder. - Execute the

job_load_data_for_virt_demojob.

Important: For this exercise we will work with a PDI file based repository, which we will register with Carte, so that all Data Services are available in an easy fashion.

Web Services (Carte REST service)

This feature has been available in PDI for quite some time and is fairly easy to set up: Just tick the Pass output to servlet option in the XML Output, JSON Output and Text Output steps. When you run the transformation on a Carte server, the step’s output will be exposed.

Find for more info here.

Example:

In a new Terminal window, navigate to the PDI install directory and run our set-project-variables.sh shell script (see instructions further up).

Start the Carte server (adjust project path):

source /Users/diethardsteiner/Dropbox/development/code-examples/pentaho/PDI/PDI-and-Teiid-data-virtualization/my-version/shell-scripts/set-project-variables.sh

sh ./carte.sh /Users/diethardsteiner/Dropbox/development/code-examples/pentaho/PDI/PDI-and-Teiid-data-virtualization/my-version/config/dev/carte.config.xml

The supplied Carte config also registers a PDI file repo, so that jobs and transformations are accessible.

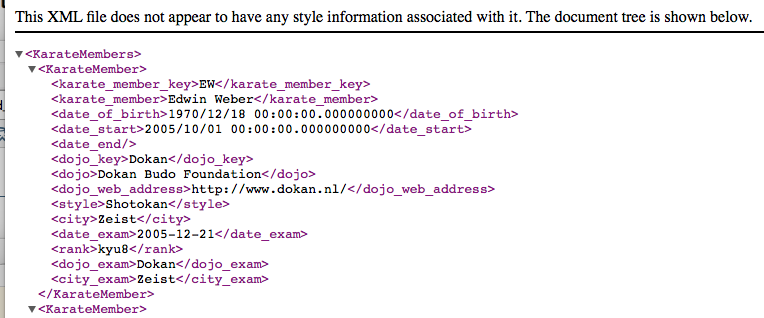

http://localhost:9081/kettle/executeTrans/?rep=pdi-data-services-example&user=admin&pass=password&trans=trf_demo_virt_karate_members

Important: When working with a repository, you must not include the file extension in the transformation name (in the above URL)!

Paste this into your favourite web browser and have fun!

Alternatively, if you were not using a repository, the approach is the following:

Open trf_demo_virt_karate_ranks, right click on the canvas and choose Properties. Copy the whole Transformation Filename and paste it into a good text editor. Mine looks like this:

/Users/diethardsteiner/Dropbox/development/code-examples/pentaho/PDI/PDI-and-Teiid-data-virtualization/my-version/di/trf_demo_virt_karate_ranks.ktr

Next replace the forward slashes with %2F to urlencode the path:

%2FUsers%2Fdiethardsteiner%2FDropbox%2Fdevelopment%2Fcode-examples%2Fpentaho%2FPDI%2FPDI-and-Teiid-data-virtualization%2Fmy-version%2Fdi%2Ftrf_demo_virt_karate_ranks.ktr

Finally we combine the above path with the web service call:

http://cluster:cluster@localhost:9081/kettle/executeTrans/?trans=%2FUsers%2Fdiethardsteiner%2FDropbox%2Fdevelopment%2Fcode-examples%2Fpentaho%2FPDI%2FPDI-and-Teiid-data-virtualization%2Fmy-version%2Fdi%2Ftrf_demo_virt_karate_ranks.ktr

Data Services (Kettle JDBC Thin Driver)

Previously known by the name Kettle JDBC Thin Driver, this feature was first announced around 2013 (see my initial blog post) and was shortly after only available in the EE version of PDI. At the end of 2015 Pentaho decided to make this feature available in the CE version.

The Metastore

The Data Service configuration details are saved in the Metastore. The Metastore is Pentaho’s attempt to create a generic and flexible metadata, data and configuration information store, which can be used by anyone for various purposes.

The XML file struture is composed of:

- A namespace

- A top level

<element>node, containing the basic<id>,<value>,<type>and<name>nodes. - The

<element>node moreover has a<children>node, which in turn can have nested<child>and<children>elements. A<child>node contains the same basic nodes as the top level element (<id>,<value>,<type>and<name>). - The

<element>node also has a<security>node, which defines the<owner>as well as the<owner-permissions-list>, explaining who has which permission to use the element.

In regards to the metastore file for the Pentaho Data Service there seems to be currently a bug/or limitation (I filed this JIRA case). Edwin Weber notes: “When I worked on a file, not in a file repository!, the metastore file got created when defining a step as a data service. Then I continued in a repository, creating these metastore files by hand.”

I had a look at both the metastore file and the PDI transformation XML file. Surprisingly enough I found metastore details in the PDI transfromation XML file as well, which seemed to be more detailed from what is written to the actual metastore file. I am not quite too sure if this was not just a consequence of me testing both the file repository as well as the normal file based approach or if this is a design feature. Furthermore it didn’t seem like the Carte server required the metastore file (I guess because the transformation file has all details in any case it already).

Defining a Pentaho Data Service

In your transformation, right click on a step and choose Data Services > New. In the Data Service dialog provide a sensible Service Name (which will be the name of the Virtual Table) and decide if you want to make use of any of the available options like Cache, Query Pushdown and Parameter Pushdown.

Pentaho Data Services have gained a few new features over the last few releases:

- Query Pushdown: A constrain (

WHEREclause) can be applied to the Table Input as well as MongoDB Input steps. - Parameter Pushdown: Can be applied to any step and supports simple constrains (e.g.

WHERE fruit = 'apple'). - Test Data Services: This dialog allows you to test/run any query against your data service, check the results and understand if it can be optimised.

- Driver Details: A very convenient dialog (new as of PDI v6.1) which list the JDBC class name and JDBC URL and also allows you to download the JDBC Driver.

The Data Service config will be stored in an XML file of the same name as the transformation in following directory:

~/.pentaho/metastore/pentaho/Data\ Service\ Transformation/

Note: You can customise the location of the Metastore by setting following parameters:

PENTAHO_METASTORE_FOLDER.

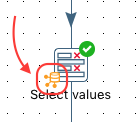

Note that once you define a Data Service for a given step, this step will show the Data Service icon:

Connecting to the Pentaho Data Source from 3rd Party Clients

As we are working with a PDI repository (which was registered with Carte), we can see all available Data Services by calling following REST service:

http://localhost:9081/kettle/listServices/

So, let’s see if we can query our tables from a SQL Client. For this example I’ll use NetBeans’ built-in SQL client, but the approach should be fairly similar with any other JDBC SQL client:

- In Spoon open any transformation which has a Data Service defined. Right click on the step for which you set up the Data Service and choose Data Service > Edit. Click on Driver Details and then click Get Driver. Save the JDBC driver in a convenient directory and unzip the file.

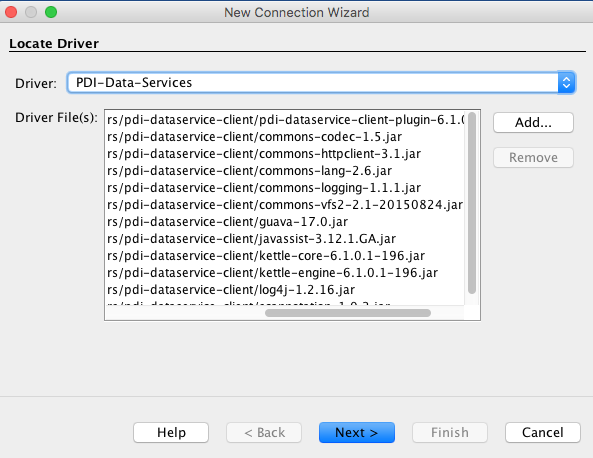

- In NetBeans click on the Services tab, then right click on Databases and choose New Connection.

-

Define a new Driver and then add the all the required files from the extracted JDBC Driver folder:

Basically just add all the jar files from the extracted JDBC folder to the Driver definition! Click Next.

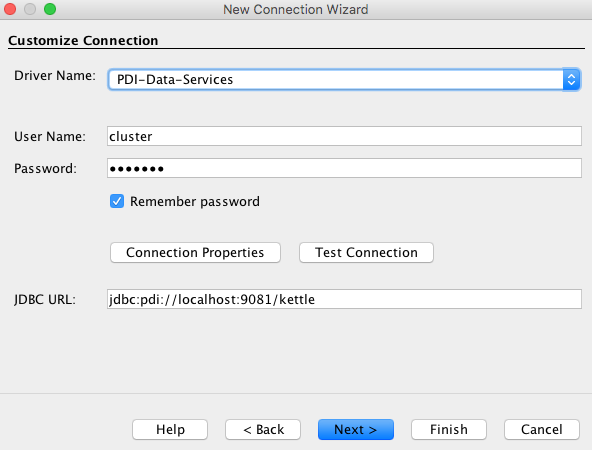

-

Next provide the connection details like so

jdbc:pdi://hostname:port/kettle. The hostname and port as well as user name and password will be the ones you specified for the Carte server, so in my case this translates to:jdbc:pdi://localhost:9081/kettle. Other SQL clients might also require the JDBC Driver class name:org.pentaho.di.trans.dataservice.jdbc.ThinDriver.

Click Finish and the connection should work now.

-

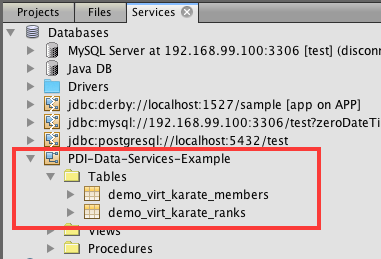

Expand the new connection node in the Services panel to see the tables:

-

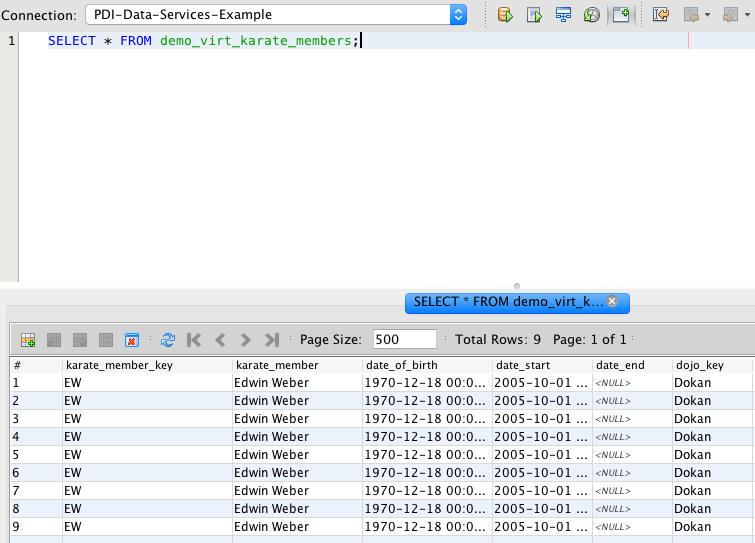

Right click on the new connection node in the Services panel and choose Execute command, which will open the SQL Editor. Write and run a query against any of our tables:

So far so good, what happens, however, if you try to join two tables? It won’t work! We shall solve this in a bit based on a solution suggested by Edwin Weber.

Connecting to the Pentaho Data Source from within PDI

I added the following connection details to our kettle.properties:

# PDI Data Services Connection

pdi_ds_host_name=localhost

pdi_ds_port_number=9081

pdi_ds_jdbc_driver_class_name=org.pentaho.di.trans.dataservice.jdbc.ThinDriver

pdi_ds_user_name=cluster

pdi_ds_password=cluster

Copy the JDBC Driver files to the pdi/lib directory.

Edwin Weber hightlighted that currently the connection type Pentaho Data Service does not work and hence the generic type should be used! This has mainly to do with the fact that for the Pentaho Data Service connection type the Pentaho EE DI Server is required.

The connection details look like this:

Open the tr_test_data_service_connection transformation and preview the output of the Table Input step:

Jens Bleuel mentioned: “The only difference I can think of you mean, there is a slightly different URL (with the addition of the WebApp name for the DI-Server). For the Carte server, you need to change the Options tab within the Pentaho Data Service connection and remove the webappname parameter. I agree that this is pretty much hidden. This will most likely be simplified in 7.0.”

Teiid - The Virtualisation Layer

Introduction and Installation

As we previously learnt, joining two Kettle virtual tables in a SQL Client results in an error. In his meetup talk, Edwin Weber demoed Teiid to solve just this issue. The open source project Teidd (sponsored by RedHat) is billed as “a data virtualization system that allows appliations to use data from multiple, heterogenous data stores”. There is also a dedicated Teiid Designer available for creating and maintaining Teiid metadata (virtual tables). There is even a Teiid Dashboard web application (which is a customised version of JBoss Dashboard Build) available. These slides provide a good introduction to RedHat Data Virtualisation.

Download the following from here:

- Teiid Runtime

- JDBC Driver

Download Teiid Designer. Add the bottom of this page you find a section called Install into Eclipse 4.5 (Mars) - follow these instructions (requires separate download of Eclipse Mars).

Then consult the Teiid Documentation; we are especially interested in the Installation Guide. There is also a very good Examples page with instructions on how to connect to various DBs, XML, Flat files, web services etc.

The Basics are that Teiid consists of following components:

- Virtual Databases

- Models

- Connectors

- Data Services

- SOAs

Let’s get started:

Extract Teiid Runtime zip folder, which is basically just a JBoss server (or WildFly server as it is called these days). Note that the docs/teiid folder contains a huge amount of examples to get started.

Now, on your command line navigate into this folder and run the following:

sh ./bin/standalone.sh -c=standalone-teiid.xml

To access the Admin Panel go to following ULR http://127.0.0.1:9990. You will see a notice that the server is running but that no user has beeen set up. To remedy this, open a new terminal window/tab, navigate to the server folder and create an Admin User by running the following:

$ sh ./bin/add-user.sh

On the first prompt just hit enter, next provide a user name and then a password. The user does not belong to any group. When asked if this new user is going to be used for one AS process to connect to another AS process, answer yes.

Once this is done, in your web browser, click on the Try again link on the WildFly page. You should then be asked for a user name and password.

A good quick starter guide is available here.

Now, we also add an application user, which we will use for the JDBC access:

$ sh ./bin/add-user.sh

What type of user do you wish to add?

a) Management User (mgmt-users.properties)

b) Application User (application-users.properties)

(a): b

Enter the details of the new user to add.

Using realm 'ApplicationRealm' as discovered from the existing property files.

Username : diethardsteiner

Password recommendations are listed below. To modify these restrictions edit the add-user.properties configuration file.

- The password should be different from the username

- The password should not be one of the following restricted values {root, admin, administrator}

- The password should contain at least 8 characters, 1 alphabetic character(s), 1 digit(s), 1 non-alphanumeric symbol(s)

Password :

WFLYDM0102: Password should have at least 1 non-alphanumeric symbol.

Are you sure you want to use the password entered yes/no? yes

Re-enter Password :

What groups do you want this user to belong to? (Please enter a comma separated list, or leave blank for none)[ ]: odata

About to add user 'diethardsteiner' for realm 'ApplicationRealm'

Is this correct yes/no? yes

Added user 'diethardsteiner' to file '/Applications/Development/teiid-8.13.4/standalone/configuration/application-users.properties'

Added user 'diethardsteiner' to file '/Applications/Development/teiid-8.13.4/domain/configuration/application-users.properties'

Added user 'diethardsteiner' with groups odata to file '/Applications/Development/teiid-8.13.4/standalone/configuration/application-roles.properties'

Added user 'diethardsteiner' with groups odata to file '/Applications/Development/teiid-8.13.4/domain/configuration/application-roles.properties'

Is this new user going to be used for one AS process to connect to another AS process?

e.g. for a slave host controller connecting to the master or for a Remoting connection for server to server EJB calls.

yes/no? no

Make sure that you assign the user the group oadata.

Adding the Pentaho Data Services JDBC Driver as a Core Module

Source: Install a JDBC Driver as a Core Module

The next step is to add the Pentaho Data Services JDBC Driver to WildFly: Copy the pentaho-data-services folder into <wildfly-root>/modules.

Create following folder structure:

cd <wildfly-root>/modules

mkdir -p org/pentaho/di/trans/dataservice/jdbc/ThinDriver/main

We will have to add a Wildfly specific module.xml to this folder (you can copy this file from one of the other folders in this subdirectory and adjust it or just copy the contents shown below):

<?xml version="1.0" encoding="UTF-8"?>

<module xmlns="urn:jboss:module:1.0" name="org.pentaho.di.trans.dataservice.jdbc.ThinDriver">

<resources>

<resource-root path="commons-codec-1.5.jar"/>

<resource-root path="commons-httpclient-3.1.jar"/>

<resource-root path="commons-lang-2.6.jar"/>

<resource-root path="commons-logging-1.1.1.jar"/>

<resource-root path="commons-vfs2-2.1-20150824.jar"/>

<resource-root path="guava-17.0.jar"/>

<resource-root path="javassist-3.12.1.GA.jar"/>

<resource-root path="kettle-core-6.1.0.1-196.jar"/>

<resource-root path="kettle-engine-6.1.0.1-196.jar"/>

<resource-root path="log4j-1.2.16.jar"/>

<resource-root path="pdi-dataservice-client-plugin-6.1.0.1-196.jar"/>

<resource-root path="scannotation-1.0.2.jar"/>

</resources>

<dependencies>

<module name="javax.api"/>

<module name="javax.transaction.api"/>

</dependencies>

</module>

Next add all the JDBC driver files to this directory.

Important: It seems like it is best practice to stop the server now and make the config changes. When I was changing

standalone-teeid.xmlwhile the server as running and later on restarted the server, my changes to the file seemed to have gone.

Creating Virtual Tables the Manual Way

Creating Connections and Drivers

Now we will create a Virtual Database (VDB) in Teiid, which will access the Pentaho Virtual Tables and allow us to query them (both individually as well as in a JOIN). We could also create a table/view in Teiid to be based on these source tables.

As the documentation states, the usual workflow is the following:

- Configuring the connections to the data sources.

- Creating the VDB file

- Start the WildFly/Teiid Server

- Deploy the VDB file

- Access the VDB using JDBC application. (ODBC is left as exercise to the user)

First off, we will create the connection/data source: Open the <wildfly>/standalone/configuration/standalone-teiid.xml file. When accessing a standard SQL Database, just add a <datasource> within the <datasources> section:

<datasource jta="true" jndi-name="java:/pdi-dataservice-karate-connector" pool-name="pdi-dataservice-karate" enabled="true" use-ccm="true">

<connection-url>jdbc:pdi://localhost:9081/kettle</connection-url>

<driver>pdi-dataservice-client-driver</driver>

<security>

<user-name>cluster</user-name>

<password>cluster</password>

</security>

</datasource>

Just a little bit further down you can find the <drivers> section. Add the following:

<driver name="pdi-dataservice-client-driver" module="org.pentaho.di.trans.dataservice.jdbc.ThinDriver">

<driver-class>org.pentaho.di.trans.dataservice.jdbc.ThinDriver</driver-class>

</driver>

Important: The

modulename must be the same as the one you defined when adding the JDBC driver in themodule.xml.

Creating the Virtual Database File

Next let’s create the Virtual Database file:

<vdb name="Karate" version="1">

<description>The Karate VDB</description>

<model name="Karate" type="PHYSICAL">

<source name="pdi-dataservice-karate" translator-name="jdbc-ansi" connection-jndi-name="java:/pdi-dataservice-karate-connector"/>

</model>

</vdb>

Save this file as teiid-vdb-karate.xml.

Deploying the Virtual Database File

Copy the karate-vdb.xml to <wildfly>/standalone/deployments. You should see a file in the same folder with the extension deployed. Also, the logs should show following message: deployed "teiid-vdb-karate.xml"

If you started WildFly/JBoss in debug mode, you will see following error messages:

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0) Caused by: org.pentaho.di.core.exception.KettleFileException:

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0) Unable to get VFS File object for filename 'plugins' : Could not find file with URI "/Applications/Development/teiid-8.13.4/plugins" because it is a relative path, and no base URI was provided.

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,320 ERROR [stderr] (Worker0_async-teiid-threads0) at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:158)

19:22:32,320 ERROR [stderr] (Worker0_async-teiid-threads0) at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:106)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:102)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) at org.pentaho.di.core.plugins.PluginFolder.findJarFiles(PluginFolder.java:124)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) ... 39 more

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) org.pentaho.di.core.exception.KettleFileException:

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) Unable to list jar files in plugin folder '/Users/diethardsteiner/.kettle/plugins'

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) Unable to get VFS File object for filename '/Users/diethardsteiner/.kettle/plugins' : Could not find file with URI "/Users/diethardsteiner/.kettle/plugins" because it is a relative path, and no base URI was provided.

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0)

And further down:

19:22:32,719 WARN [org.teiid.RUNTIME] (Worker0_async-teiid-threads0) TEIID50036 VDB Karate.1 model "Karate" metadata failed to load. Reason:TEIID11010 java.sql.SQLException: org.pentaho.di.core.exception.KettleValueException:

Unexpected conversion error while converting value [NULLABLE Integer] to an Integer

java.lang.Integer cannot be cast to java.lang.Long

: org.teiid.translator.TranslatorException: TEIID11010 java.sql.SQLException: org.pentaho.di.core.exception.KettleValueException:

Unexpected conversion error while converting value [NULLABLE Integer] to an Integer

java.lang.Integer cannot be cast to java.lang.Long

at org.teiid.translator.jdbc.JDBCExecutionFactory.getMetadata(JDBCExecutionFactory.java:297)

at org.teiid.translator.jdbc.JDBCExecutionFactory.getMetadata(JDBCExecutionFactory.java:68)

at org.teiid.query.metadata.NativeMetadataRepository.getMetadata(NativeMetadataRepository.java:92)

at org.teiid.query.metadata.NativeMetadataRepository.loadMetadata(NativeMetadataRepository.java:60)

at org.teiid.query.metadata.ChainingMetadataRepository.loadMetadata(ChainingMetadataRepository.java:55)

As there is already a problem at this stage, there is not much point progressing any further, but for completeness sake, I’ll provide a brief description. I spend quite a few hours trying to figure what was going on. The thing is that I couldn’t even registered Pentaho Data Services as standard data connection within Eclipse Mars Data Source Explorer (part of the Eclipse Data Tools Platform (DTP)). So this is already very strange. Maybe there is a JDBC driver problem? The PDI EE version however works fine. I created this Jira case therefor. More details in the Encountered Errors section further down this article.

Connecting to the Teiid Virtual DB from a SQL Client

jdbc:teiid:karate.1@mm://localhost:31000

The JDBC URL starts with jdbc:teiid:. This is followed by the VBA Name and version, so <VBA-NAME>.<VERSION>. In our example, in the VBA XML we defined this: <vdb name="Karate" version="1">, which translates to Karate.1 for the JDBC URL. Also note that you have to use the Application user credentials (not the Admin user credentials).

How to resolve problems standard Teiid setup problems

If you are having troubles establishing a connection, it is best you stop the server and start it in debug mode to see more detailed error messages:

sh ./bin/standalone.sh -c=standalone-teiid.xml --debug

You might find error messages like this one:

21:01:43,445 INFO [org.teiid.RUNTIME] (MSC service thread 1-5) TEIID50029 VDB Karate.1 model "Karate" metadata is currently being loaded. Start Time: 10/05/16 21:01

21:01:43,453 WARN [org.teiid.RUNTIME] (Worker0_async-teiid-threads0) TEIID50036 VDB Karate.1 model "Karate" metadata failed to load. Reason:TEIID31097 Metadata load requires a connection factory: TEIID30481 Failed to find the Connection Factory with JNDI name java:/pdi-datasource-karate-connector. Please check the name or deploy the Connection Factory with specified name.: org.teiid.translator.TranslatorException: TEIID31097 Metadata load requires a connection factory

at org.teiid.query.metadata.NativeMetadataRepository.loadMetadata(NativeMetadataRepository.java:55)

at org.teiid.query.metadata.ChainingMetadataRepository.loadMetadata(ChainingMetadataRepository.java:55)

Encounted Errors

If you started WildFly/JBoss in debug mode, you will see following error messages:

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0) Caused by: org.pentaho.di.core.exception.KettleFileException:

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0) Unable to get VFS File object for filename 'plugins' : Could not find file with URI "/Applications/Development/teiid-8.13.4/plugins" because it is a relative path, and no base URI was provided.

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,319 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,320 ERROR [stderr] (Worker0_async-teiid-threads0) at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:158)

19:22:32,320 ERROR [stderr] (Worker0_async-teiid-threads0) at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:106)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:102)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) at org.pentaho.di.core.plugins.PluginFolder.findJarFiles(PluginFolder.java:124)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) ... 39 more

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) org.pentaho.di.core.exception.KettleFileException:

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) Unable to list jar files in plugin folder '/Users/diethardsteiner/.kettle/plugins'

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0)

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0) Unable to get VFS File object for filename '/Users/diethardsteiner/.kettle/plugins' : Could not find file with URI "/Users/diethardsteiner/.kettle/plugins" because it is a relative path, and no base URI was provided.

19:22:32,321 ERROR [stderr] (Worker0_async-teiid-threads0)

And further down:

19:22:32,719 WARN [org.teiid.RUNTIME] (Worker0_async-teiid-threads0) TEIID50036 VDB Karate.1 model "Karate" metadata failed to load. Reason:TEIID11010 java.sql.SQLException: org.pentaho.di.core.exception.KettleValueException:

Unexpected conversion error while converting value [NULLABLE Integer] to an Integer

java.lang.Integer cannot be cast to java.lang.Long

: org.teiid.translator.TranslatorException: TEIID11010 java.sql.SQLException: org.pentaho.di.core.exception.KettleValueException:

Unexpected conversion error while converting value [NULLABLE Integer] to an Integer

java.lang.Integer cannot be cast to java.lang.Long

at org.teiid.translator.jdbc.JDBCExecutionFactory.getMetadata(JDBCExecutionFactory.java:297)

at org.teiid.translator.jdbc.JDBCExecutionFactory.getMetadata(JDBCExecutionFactory.java:68)

at org.teiid.query.metadata.NativeMetadataRepository.getMetadata(NativeMetadataRepository.java:92)

at org.teiid.query.metadata.NativeMetadataRepository.loadMetadata(NativeMetadataRepository.java:60)

at org.teiid.query.metadata.ChainingMetadataRepository.loadMetadata(ChainingMetadataRepository.java:55)

#### Final Remarks

As you can see, it is fairly easy to set up Virtualised Tables in Teiid. We only took a look at defining JDBC data sources. In his Meetup Demo Edwin also demoed accessing the PDI Web Service via Teiid, effectively eliminating the need to copy JDBC drivers to the web server.

Using Teiid Designer

There is a very good Examples page with instructions on how to connect to various DBs, XML, Flat files, web services etc. We will follow this guide.

Installation

Teiid Designer is an Eclipse plugin. Download Eclipse Mars and make sure it uses Java 8 by adjusting the eclipse.ini (see here for details). Teiid Designer requires Java 8!

Next follow the plugin instructions outlined here. Make sure you follow the instructions at the end of the page, just below the heading Install into Eclipse 4.5 (Mars).

Once you installed the plugin switch to the Teiid Designer perspective.

Creating the VDB

First let’s register our server:

Click on the No default server defined link on the left hand side panel. Just follow the instruction. On the 3rd screen set the path to the Teiid server install directory.

Next click on Define Teiid Model Project in the right hand side panel under Modeling Actions. Our project’s name is Karate. Accept all the defaults.

Now click on Create JDBC Connection in the right hand side panel under Modeling Actions

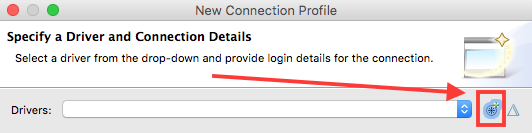

In the New Connection Profile window choose the Generic JDBC and provide a name (e.g. Karate).

On the next screen click on the New Driver Definition icon:

On the next screen make sure to highlight Generic JDBC Driver so that all of the other fields can be edited. Set Driver Name to Pentaho Data Services.

Click on the Jar List tab and then choose Add jar/zip. Choose the Pentaho Data Services JDBC extracted zip folder you originally downloaded and add all the jar files. Next click on the Properties tab and provide the following:

| Property | Value |

|---|---|

| Connection URL | jdbc:pdi://hostname:port/kettle |

| Driver Class | org.pentaho.di.trans.dataservice.jdbc.ThinDriver |

Then click OK.

Back in the New Connection Profile window provide the user name and password (cluster and cluster in our case). You have to define a value for the Database name, which can be anything, as it will not be used. Click the Test connection button. All should be fine.

Once I clicked OK I got a StackOverflow error message. Strangely enough I also couldn’t get a Pentaho Data Services connection working in DBeaver, which is Eclipse based as well.

Special thanks also to Dan Keeley for testing the connection in Eclipse to confirm problem.